Note: These are speaking notes from a presentation I gave at SparkleCon in 2018. Most of the information is in the notes; Slideshare displays only the slides, which are about 10% of the content.

Nixon is a great security researcher and I agree wholeheartedly with the first half of this statement. Attackers are using botnets primarily for profit, with Distributed Denial of Service (DDOS) attacks as a primary source of income.

I respectfully disagree with the idea that the only solution is to turn to law enforcement. While law enforcement has great powers of investigation and response after the fact, there are still a number of steps we can take to prevent attacks in the first place.

|

On Bots/Zombies

|

Attackers exploiting botnets to perform large-scale DDOSes has become common. Bots (or robots, or zombies, whatever you call them) are nodes in a network of malicious machines. Traditionally attackers either infected machines with malware or, in a less automated fashion, convinced users to act like bots.

LOIC is an example of software that allows users to participate in a DDOS. This is the simplest technique, where each user is independent but collaborates with a multitude of like-minded users. Imagine a hundred thousand individuals each with a single rifle all aiming at the same target. Some will miss. Some rifles will misfire. Some will never understand how to fire a bullet. Regardless, a majority will hit the target. Of course the efficiency of such an attack is much less than one that eliminates human error.

Infecting numerous bots is sometimes only the first step. An attacker with hundreds of thousands or millions of bots needs to do something with them. They make no money lying idle. This is where another traditional technique is used: DOSaaS (Denial of Service as a Service). I have a botnet, you pay me to take your target down, we all profit. Except the major site we’ve just knocked down.

|

| Third party services increase as a market matures. |

Attackers are looking to make a profit. The usual methods work, but they can be costly. One needs to purchase or develop one’s own malware to build up one’s botnet.

IoT botnets help to reduce those costs. Several IoT botnets have had their source code released by their authors.

Turns out security on IoT devices is severely lacking. No traffic control, no firewalls, no real authentication. Default credentials. Let me repeat that, default username/password combinations that users can’t easily change.

Mirai made the big splash, using a long list of default credentials to log in to embedded devices and then turning them into bots.

Having a list of default creds is useful to attackers, but more benevolent trespassers on one’s internet-enabled cameras and home routers can use the same list to log in and patch your systems. Linux.Wifatch is famous for being a worm that connects to IoT devices and patches them, locking out the bad guys. Linux.Hajime does something similar, locking down ports to prevent other botnets from connecting.

Good or bad, none of these worms would gain as much traction amongst IoT devices if it were possible to modify logins.

|

So, Brickerbot?

|

Right so, Brickerbot. Where Wifatch and Hajime do their part in securing devices by changing passwords or disabling outside access, Brickerbot goes about it in a slightly different manner. If we just brick all of these vulnerable IoT devices they can’t be turned against us. Great idea.

Mudge, of L0pht and DARPA, has heard about this idea too. From reasonable folks in the Intelligence Community and the Department of Defense. These are kind of the folks who get to take direct action. Except it seems even they couldn’t get away with bricking the devices of civilians in the US and all over the world.

The author of Brickerbot calls himself The Doctor. He has also posted on an underground forum as ‘Janitor’. Sometimes attribution is easy, like when an actor directly connects online identities or claims credit. Attribution is harder when all you have is the end result such as a binary or obfuscated script.

A source code release, like the one the authors of Linux.Wifatch made, is a primary method of claiming authorship. Releasing an obfuscated script that doesn’t or cannot execute is like showing off portions of a wrecked fighter aircraft with any and all markings or identifying information missing or removed. It makes for a great showpiece and allows one to take or (more often) give credit. Other researchers suggest that some of the attacks that The Doctor claimed, such as one on a major mobile carrier’s network, were not performed by him, or at least not using any of the exploits contained in Brickerbot. Do we just take the word of whichever party we have a greater trust in? Without a release of source or of forensic results from the various attacks, it seems that’s the default position.

Where are we?

- We may not be able to trust Brickerbot’s ‘author’

- The publicly available sample is essentially an obfuscated list of exploits and ‘bricking’ code

- We need to examine the Brickerbot code a little closer to see what we have

|

What's bricking?

|

Bricking is just turning useful devices into something as useful as a brick. This is a perfectly legal action that one can perform on devices that one owns. When done to others, it is almost always illegal and occasionally an act of war.

To be clear, Brickerbot is intended to operate entirely on others’ devices.

|

Brickerbot source code is everywhere...

|

Normally coming from the malware analysis side, I’m loath to help spread malware. In this case, the cat is out of the bag, the horses have left the barn, and there are dozens of pastebin like sites and one or two github accounts with a copy of the released Brickerbot script.

So if you would like a copy of the script in order to play along at home/analyze just google for the following line:

“if 57 - 57: O0oo0OOOOO00 . Oo0 + IIiIii1iI * OOOoOooO . o0ooO * i1”

|

Malware analysis, simple? Not quite.

|

A quick aside about the malware analysis process. Assuming you’re not doing it as a hobby it’s almost always performed under pressure.

One never has enough time to do a complete teardown, to check every nook and cranny of a given target. You may love puzzles and even enjoy completely solving them, but you never have that time at work. When you eventually do, it’s known as that nearly mythical time period ‘vacation’.

An analyst always has to deal with multiple competing pressures from various interested parties. In no particular order:

- Customers

- Bosses/Upper Management

- Competitors

- Press

Depending on how one receives a sample one or more of these will be aware and the clock starts ticking. One won’t always satisfy all parties. Regardless, handling the various competing interests is the job.

Ok you don’t have unlimited time. A customer is under attack. The press is minutes from publishing. Higher ups are yelling at your boss for an update. What do you do?

Generally one searches for IOCs (Indicators of Compromise). It really does become: What is the least I need to see before I know my home/office/business is irrecoverable?

|

| Time to look at the sample |

What has The Doctor dropped in our collective laps?

We’ve got a script that appears obfuscated. No line listing the interpreter the shell should use. First steps for analyzing this malware:

1) Let’s see if it runs

a) Make sure the VM has no network access

b) Include possible runtimes (python2, python3)

Shocker! It doesn't run. Why?

Running with Python 3 fails, mainly because the print statement became a function in Python 3.

Thus we know it’s Python 2.

Running under Python 2 it fails. Symbol not found. The extra whitespace inside the function calls looks like a suspect, so next we remove it. Strictly speaking, Python tolerates spaces around the dot and the parentheses, so this is cleanup rather than a fix.

Re-run and it fails again, and now the cause is plain: not a single library is imported. You have to be kidding me. The script as provided was never intended to run.
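The "which Python is this?" question can also be answered without executing a single line of the sample. Here is a minimal sketch, assuming you run it under Python 3; the function name is mine, not something from the sample. The built-in compile() only parses and byte-compiles the source, it never runs it. Keep the VM offline anyway.

```python
def parses_under_this_interpreter(source, filename="<sample>"):
    """Return (True, None) if the source compiles here, else (False, reason).

    compile() parses the code but does not execute it.
    """
    try:
        compile(source, filename, "exec", dont_inherit=True)
    except SyntaxError as exc:
        # Typical for Python 2 code under Python 3: print used as a statement.
        return False, "line %s: %s" % (exc.lineno, exc.msg)
    except ValueError as exc:
        # Older interpreters raise ValueError for source containing null bytes.
        return False, str(exc)
    return True, None
```

A Python 2 style print statement fails this check under Python 3, which is the same hint the failed run gave us, only cheaper. Note that a missing import will not show up here; undefined names only surface at run time.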

2) Maybe de-obfuscating the script would simplify the analysis

- Writing a custom de-obfuscator is a good solution; unfortunately it’s a vacation project. We’ve still got a job to do.

- It’s good to get acquainted with Python’s tokenize module (tokenize.py) for that eventual vacation

- We can still write a one-off script to quickly remove dead code. Dead code being things like if statements that are always false, or statements that make no changes to program state.

- Here’s where one gets to know PyLint on a first name basis. Statically checking for errors allows one to remove useless lines of code from the script.

- A final step is to pretty-print the script. Pretty-printing reformats source code so that it follows certain coding guidelines(e.g. Tabs not spaces, splitting multiple statements on one line over multiple lines, etc.)
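The one-off dead code remover and the pretty-printing step can be sketched together with the standard ast module. This is a rough sketch under stated assumptions, not the script I used: it needs Python 3.9+ for ast.unparse, it only works on source the running interpreter can parse (so a Python 2 sample needs its print statements handled first), and it only recognises conditions built from numeric constants with +, - and *. All names here are illustrative.

```python
import ast
import operator

# Arithmetic we are willing to evaluate when deciding if a condition is constant.
_FOLDABLE = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}
_UNKNOWN = object()  # sentinel: "cannot tell at analysis time"


def constant_value(node):
    """Evaluate expressions such as `57 - 57`; return _UNKNOWN otherwise."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _FOLDABLE:
        left = constant_value(node.left)
        right = constant_value(node.right)
        if left is not _UNKNOWN and right is not _UNKNOWN:
            return _FOLDABLE[type(node.op)](left, right)
    return _UNKNOWN


class DeadIfRemover(ast.NodeTransformer):
    def visit_If(self, node):
        self.generic_visit(node)  # clean nested blocks first
        value = constant_value(node.test)
        if value is _UNKNOWN or value:
            return node  # condition is not provably false: keep it
        # Always false: keep only the else branch, or a placeholder `pass`
        # so that the enclosing block never becomes empty.
        return node.orelse or ast.Pass()


def strip_dead_ifs(source):
    """Drop always-false if statements and return re-printed source."""
    tree = DeadIfRemover().visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))
```

Because the result is re-printed from the syntax tree, the padded spacing goes away as a side effect: a statement written as `t . sleep ( 5 )` comes back as `t.sleep(5)`. The leftover `pass` lines are ugly but keep the output valid; a second pass could prune them.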

After all those steps, it still doesn’t run. Assuming malware analysis is part of your job, you stopped trying to deobfuscate after the step with the unknown symbols. You then probably went straight to the next stages.

|

Initial steps

|

Now you’ve figured out it’s non-functional. Now it’s time to find all the low-hanging fruit. In this case, the author provided the initial hint, suggesting that one could just check the unencrypted/unobfuscated strings.

One of the first steps in statically analyzing all malware is to extract all strings. Really. Some of the best clues for attribution come from identifiers left in the code, or shout-outs to colleagues or malware researchers. Egos have led to a number of malware authors getting convicted.
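For a binary sample this is what the Unix strings tool does. A minimal Python equivalent, as a sketch: the function name and the minimum length of 4 are my choices, and it only looks for printable ASCII runs (no UTF-16 and so on).

```python
import re


def extract_strings(data, min_len=4):
    """Return runs of printable ASCII at least min_len bytes long."""
    # 0x20-0x7e covers space through tilde, i.e. printable ASCII.
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [match.group().decode("ascii") for match in pattern.finditer(data)]
```

Typical use is `extract_strings(open(path, "rb").read())`, then a skim through the output for hostnames, paths, credentials and the occasional shout-out.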

In the case of Brickerbot, the simple obfuscation used by the author removes all identifiers(i.e. variable names, messages, etc.). It’s more about not having it tied back to the author than making it difficult to learn how the worm operates.

Another time-saver used in analysis is to read other analysts’ reports. This lets you see if you’ve missed anything important(e.g. malware emailing all your contacts). It also lets you re-direct analysis to portions of the malware not yet analyzed or to specific payloads.

With Brickerbot, since it won’t run and there’s so much useless code, an analyst can look at it as a container for various IoT device exploits.

|

Cleaning up the code

|

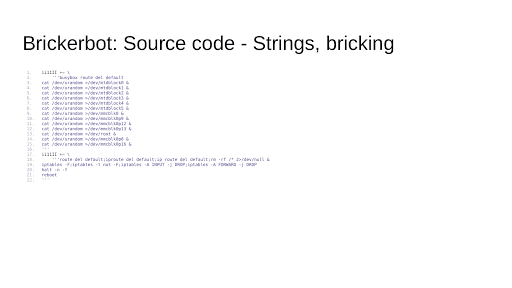

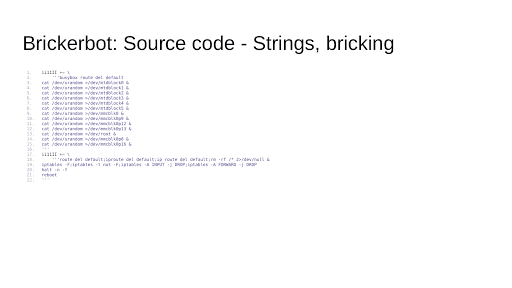

Let’s look at the Brickerbot source code.

Random, similar looking variable names, more whitespace than necessary. This looks horrible.

The if statements where the conditional is equivalent to 0 will never run the code that follows. Dead code.

There are spaces in function calls. These don’t even help readability. Python still parses the padded sleep call on line 7 as an ordinary .sleep() call, but with nothing imported it amounts to the undefined symbol time, a dot, and the attribute sleep.

Much of this can be removed as described earlier with a custom script to delete all dead code.

|

| Some of this code is ... dead! |

Ok, now things are looking a bit cleaner, we can see functions. Names that have been obfuscated are lost, but one can eventually rename them according to their function. Similar to what one does with functions in an unknown binary.

This is also after pretty-printing the script so that we get rid of the excessive/additional whitespace.

Also, as mentioned earlier, no libraries are imported so the code still won’t run.

You should be getting a better picture of the roadblocks placed in the code.

|

Back to searching for strings

|

Now we can go back to step 1 of the static malware analysis process, searching for strings.

This particular segment includes commands that overwrite storage on a particular device with random data. Routes and firewall rules are cleared from memory and deleted. Then the system is stopped and rebooted. By now there should be no code left to run so your home router is now useless.
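For a script, the string search can be done on the source itself. The sketch below assumes Python 3 and uses the standard tokenize module, which is purely lexical, so it copes with code that will not compile (such as Python 2 print statements). The pattern list is illustrative only: it reflects the kinds of behaviour described above, not signatures taken from the actual sample, and all names are mine.

```python
import ast
import io
import re
import tokenize

# Illustrative patterns for the behaviours described in the text (assumptions).
SUSPICIOUS = {
    "overwrites storage": re.compile(r"\bdd\b.*\bof=/dev/"),
    "flushes firewall rules": re.compile(r"\biptables\b.*\s-F\b"),
    "deletes routes": re.compile(r"\broute\s+del\b"),
    "stops or reboots": re.compile(r"\b(?:halt|reboot)\b"),
}


def string_literals(source):
    """Collect the string literals in Python source without running it."""
    literals = []
    reader = io.StringIO(source).readline
    for tok in tokenize.generate_tokens(reader):
        if tok.type != tokenize.STRING:
            continue
        try:
            value = ast.literal_eval(tok.string)  # strip quotes and escapes
        except (SyntaxError, ValueError):
            value = tok.string  # e.g. a Python 2 only prefix: keep raw text
        if isinstance(value, bytes):
            value = value.decode("latin-1")
        literals.append(value)
    return literals


def flag_strings(source):
    """Return (label, text) pairs for literals matching a suspicious pattern."""
    hits = []
    for text in string_literals(source):
        for label, pattern in SUSPICIOUS.items():
            if pattern.search(text):
                hits.append((label, text))
    return hits
```

This is triage, not detection: it tells you which strings to read first, and a clean result proves nothing.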

So what do we know now?

- This code is annoying

- So is The Doctor

- All devices with the default username and password will get disabled permanently

- Vulnerable devices are fixed now; since they can’t work, they’re safe

So smaller IoT/embedded devices are quite vulnerable and badly secured. Do bigger embedded systems and Internet-connected devices face similar threats? Can a Brickerbot for my washing machine be far away? Would other, larger devices be more vulnerable? Say, my car?

|

"Zombie" cars

|

These days even cars are members of the Internet of Things. What’s the worst that can happen to my car? Can it become part of a botnet? Let’s see what an expert on automotive security thinks.

Charlie Miller is formerly of the NSA, but has made a name for himself in the private sector as a capable security researcher. Sorta reminds me of Star Trek’s Captain Picard, clever and wise.

He wrote the first public exploits for Android and iOS, on the Google G1 and original iPhone. Later he and Chris Valasek received a grant from DARPA’s Cyber Fast Track program(the same one founded by Mudge) to research automotive security. So he moved on from hacking PCs, to hacking phones, to hacking the very cars you and I drive.

And people complained, ‘but those aren’t remote exploits. You’ve got to be in the car to hack them. Any crook can do that.’

And verily, Charlie and Chris developed remote exploits.

So when Charlie says that

- it’s not simple to hack all the cars

- there aren’t as many people hacking cars

You’d do well to believe him.

|

"Hacker Dreads"

|

Except for movie hackers…

If you’ve seen the seminal ‘Hackers’ (1995) you know that “real” hackers can be identified by their hairstyles, with dreadlocks possibly being a necessary factor in successful cyber attacks (e.g. Matthew Lillard’s character Cereal Killer). As one can see, Ms. Theron’s character, Cipher, must be quite the criminal hacker.

After all, the main heroes in the 'Fate of the Furious'(Johnson, Diesel & Statham) lack both hair and computer skills.

Realistically one can get a better sense of the threat posed by Cipher by viewing her environment. She is obviously well-funded (either state-backed or via independent fortune) and employs a full team of experts. Especially experts that can hack cars.

When an underling tells her there are a couple thousand cars in the vicinity of her target, she orders him to hack all of them. Yes, all of them. Never mind the brand, the telematics system, any and all firmware(or lack of such) within each of these thousands of automobiles. Those hacker dreads must confer some extreme hacking powers.

|

"Bricking cars" the Hard Way

|

We should just brick everybody's car in a 5 mile radius. It’s not like anyone needs to get to work, pick up their kids, drive a buddy to the hospital, or drive for Lyft/Uber. Maybe they didn’t really need their personal cars.

This goes back to the original idea of abdicating any responsibility as developers and manufacturers of IoT devices to Law Enforcement. Or in the case of The Doctor, to vigilantes.

It's a common idea that fixing bugs at the earliest point in development is many times cheaper and less dangerous than patching them after release. Though we can’t always fix before customers take possession.

It’s possible to release firmware updates and patches afterwards, but there is not always incentive to do so. The manufacturer of my car will occasionally release updates for the telematics system living in my dash, but the manufacturer of my new Internet enabled Toaster might say it’s no longer supported.

This is not usually a problem. That is, until attackers not as benevolent as the Wifatch and Hajime authors or Charlie & Chris discover and exploit a vulnerability to turn your new Mustang into a torpedo. With Miller & Valasek I know they’ll make an effort to reach out to the manufacturer so that a patch or update can be created.

We can learn lessons from the way vulnerability reports are handled on other platforms. Embedded systems and IoT devices are not as dissimilar from desktop and server PCs as one would think. The same way security researchers reach out to Apple or Google for bugs in their phone OSes, they can reach out to various device manufacturers.

In theory.

|

Industry buy-in

|

It can be difficult to get industry members to agree on issues like vulnerability disclosure, regular patching and in general working with outside security researchers. It is very much like the common description of ‘herding cats’. While many of the players may look similar and even share similar interests it is difficult to get them all to come to the table.

Each company has no special reason to trust another. It usually takes commonly trusted individuals and backing from companies with greater resources just to begin the conversation.

Fortunately we’re seeing motion towards that goal in a small subset of the Internet of Things/Internet-connected embedded devices.

|

Steps to get Industry buy-in

|

How do we get all these cats eating peacefully at the same bowl?

We can start by selecting someone credible and trusted widely as a focal point for the multitude of players. Get a Pied Piper that all the cats can turn towards. In this case that would be Renderman(Brad Haines), a well known and respected security researcher. He’s also a CISSP.

Renderman has a wide range of experience with penetration testing, wireless security and computer security research. He is a published author and a speaker at numerous computer security (Black Hat USA) and hacker (Def Con) conferences.

The interesting part of this is that Renderman is operating within the category of items that fall under the heading ‘Adult Sex Toys’ on Amazon.com.

One might have expected that other personal IoT devices, such as fitness trackers, would be where more security research would occur. The recent information leak from Strava which showed running paths of US armed forces members is one such area of research. Here a personal IoT device’s lack of default security and privacy controls ends up violating Operational Security(OPSEC) at US bases around the world. The main threat is not the bases’ locations(arguably opposition forces and host countries already have that knowledge), but more that of current intelligence confirming activity at the bases and possibly number/names of active personnel.

Renderman has accomplished something that those of us in other specialties (e.g. Antivirus/Anti-malware) haven’t: he’s managed to convince disparate manufacturers to trust him, both as a source of best security practices and as an interface to the wider community of vulnerability researchers and hackers.

The key is for security researchers to have a path or some organized way to provide vulnerability information to manufacturers. We’ve seen on the desktop that there is a chilling effect when vendors sue or attempt to prosecute security researchers for their discoveries. Coming up with a set of guidelines so that researchers can talk to manufacturers and vice versa will help make all of us much more secure.

One more aspect that may have led to Renderman’s success at organizing vendors is the use of branding. Others have found success in branding vulnerabilities (e.g. Shellshock, Krack, Spectre, etc.). As with various pharmaceuticals, end users and experts alike are more likely to remember a brand name than a numbered CVE. In the case of a project about internet-connected adult toys, it is the ‘Internet of Dongs’ and vulnerabilities are assigned DVEs (Dong Vulnerability and Exposures). Despite the jocular naming, the project has had success in getting reported vulnerabilities handled and in improving communication and cooperation with manufacturers. Other IoT vendors and the security research community can use it as a template for securing the millions of other Internet-connected devices. Perhaps then we can see the end of the threat of IoT worms.

Questions

Q: What sort of threat model are IoT vendors using?

A: Good question. Default credentials imply there is no threat model. A basic threat model would take into account the simplest attacker, your common script kiddie: take a dictionary of default credentials and a list of target addresses and feed them to a scanner or ready-made tool. Even after the number of in-the-wild IoT worms we’ve seen, the script kiddie would still end up with control of a significant number of IoT devices. Unfortunately security is currently at best an afterthought for a large number of vendors.

A proper threat model would need to take into account attackers of various skill levels and budgets.

Script kiddies might be kept at bay simply by letting users easily change passwords, or by using public-key cryptography to sign firmware so that updates come only from the manufacturer. Best practices in security will protect the bulk of users. If your threat model also includes nation-state actors, then it’s likely your development budget is of a commensurate size.
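On the device side, the password point can be as small as a first-boot policy check. A minimal sketch follows, with everything in it assumed for illustration: the credential list is a tiny sample of commonly cited factory defaults and the function names are hypothetical, not any vendor’s API.

```python
# Small illustrative sample of factory-default logins (assumption, not a real list).
KNOWN_DEFAULTS = {
    ("admin", "admin"),
    ("admin", "password"),
    ("root", "root"),
    ("user", "user"),
}


def is_default_credential(username, password):
    """True if the pair is a known factory default (username case-insensitive)."""
    return (username.lower(), password) in KNOWN_DEFAULTS


def may_enable_remote_access(username, password):
    """Policy: no network-facing login until the default has been changed."""
    return bool(password) and not is_default_credential(username, password)
```

The design choice is that the check runs on the device before any network-facing login service starts, so a worm carrying the same dictionary finds nothing to log in to.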

It would also need to consider the attack surface for a given device. Does it connect to the Internet? Is the firmware protected from modification? Can an attacker cause the Li ion batteries to overload/ignite? Is it possible to inject malicious traffic into the stream of commands sent to the device?

Regardless, these are factors that need to be considered at the beginning of the development cycle and not once the product is in the hands of consumers. Once there, it is considerably more expensive to mitigate (e.g. by patching, or recalling devices).

Q: Would legislation help to increase security in IoT/Internet-connected embedded devices?

A: Legislation is insufficient. Mandating that IoT devices must be secure does not automatically make them so. While there is no legislation that calls for Windows to be secure, the market, consumers, and the work of numerous vulnerability researchers have, over the years and along with Microsoft, made Windows increasingly secure.

What IoT and embedded developers can take from the example on the desktop is that similar mitigations can be applied to their products. And that adding security helps to reduce expenses and increases consumers’ trust in their products.